
Care that Matters: Quality Measurement and Health Care

Barry Saver and colleagues caution against the use of process and performance metrics as health care quality measures in the United States.

Published in the journal: Care that Matters: Quality Measurement and Health Care. PLoS Med 12(11): e1001902. doi:10.1371/journal.pmed.1001902

Category: Policy Forum


Summary Points

There is limited evidence that many “quality” measures—including those tied to incentives and those promoted by health insurers and governments—lead to improved health outcomes.

Despite the lack of evidence, these measures and comparative “quality ratings” are used increasingly.

These measures are often based on easily measured, intermediate endpoints such as risk-factor control or care processes, not on meaningful, patient-centered outcomes; their use interferes with individualized approaches to clinical complexity and may lead to gaming, overtesting, and overtreatment.

Measures used for financial incentives and public reporting should meet higher standards.

We propose a set of core principles for the implementation of quality measures with greater validity and utility.

Everyone aspires to quality health care. Defining “quality” seems straightforward, yet finding metrics that capture the quality of health care is difficult, a point recognized by Donabedian [1] almost half a century ago. Although we often associate quality with price, Americans have the world’s most expensive health care system yet disappointing patient outcomes [2]. As a means to improve outcomes and control costs, payers in the United States, United Kingdom, and elsewhere are increasingly using metrics to rate providers and health care organizations as well as to structure payment.

Payers demand data in order to pay providers based on “performance,” but such quality measures and ratings are confusing to patients, employers, and providers [3,4]. Despite recent flaws in implementing measures for Accountable Care Organizations (ACOs), the Centers for Medicare and Medicaid Services (CMS), which administers national health care programs in the US, is moving towards linking 30% of Medicare reimbursements to the “quality or value” of providers’ services by the end of 2016 and 50% by the end of 2018 through alternative payment models [5]; more recently, CMS announced a goal of tying 85% of traditional fee-for-service payments to quality or value by 2016 and 90% by 2018 [6]. Earlier this year, the Medicare Payment Advisory Commission cautioned that “provider-level measurement activities are accelerating without regard to the costs or benefits of an ever-increasing number of measures” [7].

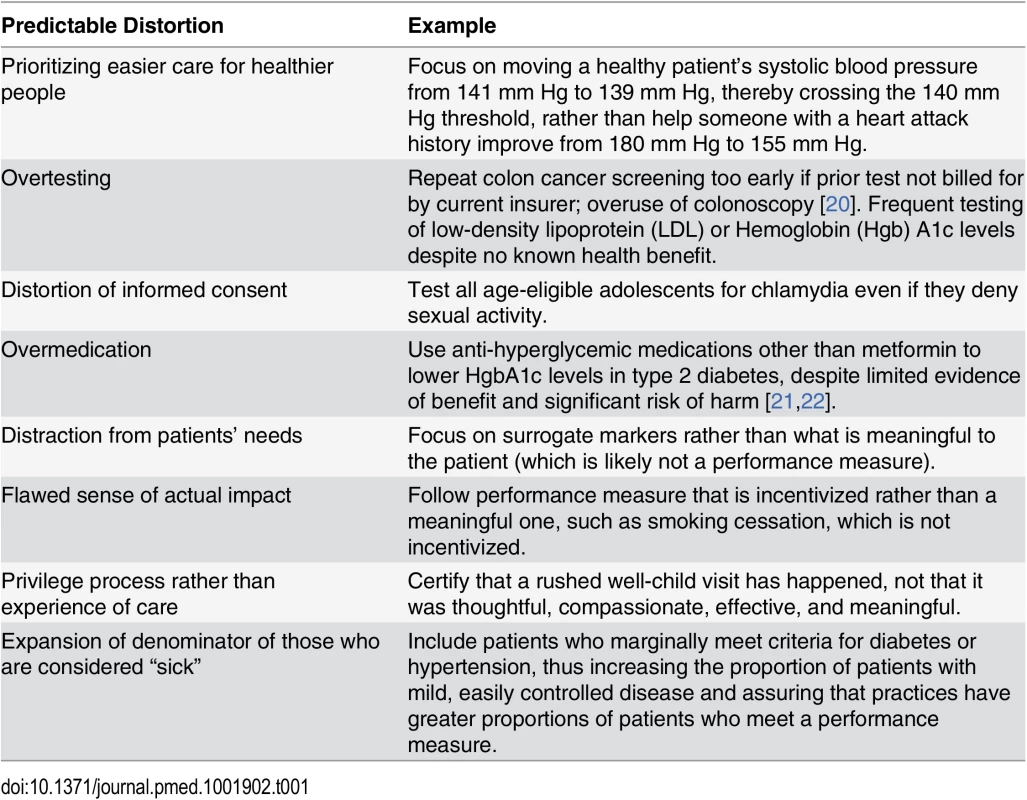

Evidence connecting many quality measures with improved health outcomes is modest, and metrics may be chosen because they are easy to measure rather than because they are evidence-based [8]. The Institute of Medicine (IOM) has warned against using easily obtained surrogate endpoints as quality indicators, because achieving them may not yield meaningful health outcomes [9]. When evidence does exist, the relationship between risk and biomarkers is typically continuous—yet measures often use discrete cutoffs. With payment at stake, clinicians and organizations may be tempted to game the system by devoting disproportionate effort to patients barely on the “wrong” side of a line rather than focusing on those at highest risk [10,11]. Some well-known quality measures do not perform as intended, or may even be associated with harm (e.g., drug treatment of mild hypertension in low-risk persons has not been shown to improve outcomes [the yet-to-be-published SPRINT trial enrolled high-risk participants]; glycemic control with drugs other than metformin in type 2 diabetes may cause harmful hypoglycemia yet fail to appreciably reduce morbidity and mortality) (Table 1 and S1 Table) [12–17]. This phenomenon is described as “virtual quality” [18]. Though some guidelines emphasize shared decision making [19], patient preferences are rarely addressed in guidelines.
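The incentive created by a discrete cutoff can be made concrete with a toy calculation. The sketch below is purely illustrative and assumes a hypothetical 8.0% hemoglobin A1c cutoff, four invented patient values, and a crude “mean excess above 7.0%” score as a stand-in for continuous risk; none of these are clinical data or an endorsed risk model. It compares a strategy that nudges two patients barely over the line with one that substantially improves the two highest-risk patients.

```python
# Toy comparison of a dichotomized (threshold) measure with a continuous one.
# HYPOTHETICAL: the 8.0% cutoff, the 7.0% floor, and all patient values are
# invented for illustration; "excess above the floor" is a crude stand-in for risk.

CUTOFF = 8.0  # hypothetical HbA1c (%) below which a patient counts as "controlled"
FLOOR = 7.0   # hypothetical level below which no further credit is given


def threshold_score(hba1c_values):
    """Share of patients under the cutoff (higher looks 'better')."""
    return sum(v < CUTOFF for v in hba1c_values) / len(hba1c_values)


def mean_excess(hba1c_values):
    """Mean HbA1c above the floor (lower is better); every unit of improvement counts."""
    return sum(max(v - FLOOR, 0.0) for v in hba1c_values) / len(hba1c_values)


baseline = [8.1, 8.2, 11.5, 12.0]
# Strategy A: push the two patients just over the line to just under it.
nudge_near_cutoff = [7.9, 7.9, 11.5, 12.0]
# Strategy B: large reductions for the two highest-risk patients.
treat_highest_risk = [8.1, 8.2, 9.5, 10.0]

for label, values in [
    ("baseline", baseline),
    ("nudge near cutoff", nudge_near_cutoff),
    ("treat highest risk", treat_highest_risk),
]:
    print(f"{label:>20}: threshold={threshold_score(values):.2f}  "
          f"mean excess={mean_excess(values):.2f}")
```

In this toy setting the threshold measure rewards strategy A (half the patients now count as “controlled”) and gives strategy B no credit at all, even though B removes far more of the modeled excess.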

Table 1. Ways targets distort care (see S1 Table for further detail and references).

Process and performance metrics are increasingly used as quality measures. One influential US program has stated that its “incentive payments are determined based on quality measures drawn from nationally accepted sets of measures” [23]. But these measures are typically derived from the Healthcare Effectiveness Data and Information Set (HEDIS), whose sponsor states they “were designed to assess measures for comparison among health care systems, not measures for quality improvement” (boldface in original) [24]. ACO quality measures not created by CMS carry the disclaimer that “These performance measures are not clinical guidelines and do not establish a standard of medical care, and have not been tested for all potential applications” [25]. When sponsors attach such disclaimers to their metrics, it is appropriate to question their use in public reporting and financial incentives. Limited understanding of the risks and benefits of testing, difficulties inherent in communicating complex risk information, and misplaced trust in advocacy organizations make physicians, patients, and payers susceptible to the erroneous impression that poorly chosen targets are valid and appropriate [26].

In our Massachusetts clinical practices, we have encountered examples of questionable targets across several organizations, such as the following: encouraging unnecessary urine microalbumin testing of diabetic patients already taking angiotensin-converting enzyme inhibitors or angiotensin receptor blockers [27], unnecessary fecal blood testing in patients who had undergone colonoscopy within the past 10 years (but not credited because the current insurance plan had not paid for it) [28], instructing women aged 40–49 years to schedule a mammogram rather than engaging them in shared decision making [29], and encouraging clinicians to start medications immediately for patients with diabetes whose blood pressure, low-density lipoprotein (LDL), or hemoglobin A1c is mildly elevated, rather than following recommendations for lifestyle interventions first [30–32]. Similarly, practitioners in the UK have received incentives for dementia screening, despite criticism that evidence to justify dementia case finding was lacking [33]. If non–evidence-based targets are created and supported with financial incentives, changes in patient care may be inappropriate (Table 1) [8,34].

Fee-for-service has been a critical driver of runaway costs and disappointing health outcomes in the US. “Quality” measures provide newer, different incentives, but may not achieve their purpose and may divert us from more thoughtful approaches and useful interventions, such as addressing social determinants, multimorbidity, and individualized care [35–39].

We believe there must be fundamental change in our approach to quality measurement. Surrogate and incompletely validated measures may, at times, appropriately inform quality improvement, but measures used for public reporting and financial incentives should meet higher standards. We propose an initial set of principles to guide the development of such quality measures (Box 1). Such measures should merit public trust, earn the support of clinicians, and promote the empowerment of patients. Their development should be open and transparent with careful attention to the best evidence of utility.

Box 1. Core Principles for Development and Application of Health Care Quality Measures

Quality measures must:

address clinically meaningful, patient-centered outcomes;

be developed transparently and be supported by robust scientific evidence linking them to improved health outcomes in varied settings;

include estimates, expressed in common metrics, of anticipated benefits and harms to the population to which they are applied;

balance the time and resources required to acquire and report data against the anticipated benefits of the metric;

be assessed and reported at appropriate levels; they should not be applied at the provider level when numbers are too small or when interventions to improve them require the action(s) of a system.

Quality improvement is a continuous process. Our principles were developed through consensus and are neither perfect nor exhaustive; like other guidelines, they will benefit from evaluation and modification. Good quality measures should inform consumers, providers, regulators, and others about the quality of care being provided in a setting, so the primary concerns for good measures are content validity and impact on health.

Implications of the Guiding Principles

We are critical of current quality measures and believe many should be abandoned. In a 2013 US Senate hearing, a representative of business interests urged Medicare and other insurers to sharply reduce the number of indicators being used and “measure and report the outcomes that American families and employers care the most about—improvements in quality of life, functioning, and longevity” [40]. The Institute of Medicine’s 2015 report, Vital Signs: Core Metrics for Health and Health Care Progress, highlights how “many measures focus on narrow or technical aspects of health care processes, rather than on overall health system performance and health outcomes” and finds that the proliferation of measures “has begun to create serious problems for public health and for health care” [41,42].

Another recent editorial, highlighting the importance of intrinsic versus extrinsic motivation for physicians, asks: “How Did Health Care Get This So Wrong?” [43]. In the UK, a study looking at effects of the Quality and Outcomes Framework (QOF)—a broad-based array of metrics designed more systematically than similar efforts in the US—concluded that it appeared to have changed the nature of the consultation so that the biomedical agenda related to the QOF measures is prioritized and the patient’s agenda is unheard [44].

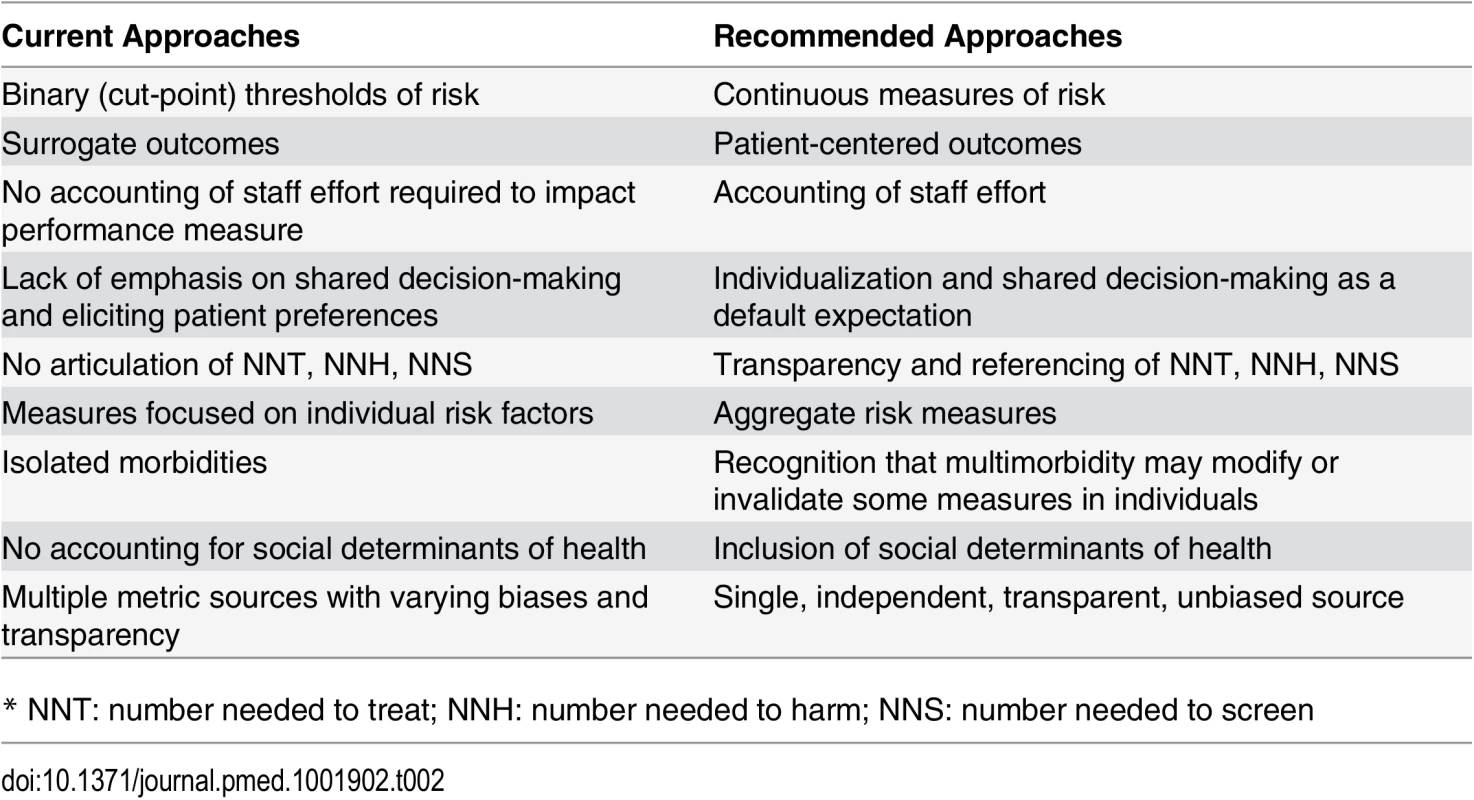

Patient-centeredness means that quality measures need to reflect outcomes experienced and valued by patients. Patient satisfaction is clearly a critical construct, but clinicians have a responsibility beyond maximizing satisfaction. Patient-reported outcomes, such as those being gathered by the US National Institutes of Health PROMIS program (www.nihpromis.org) and the International Consortium for Health Outcomes Measurement (ICHOM) [45], should also be included. In Table 2, we summarize some of the current, flawed approaches to quality measures and our recommendations for approaches consistent with our suggested guiding principles.

Table 2. Comparison of typical performance measures and author recommendations.

* NNT: number needed to treat; NNH: number needed to harm; NNS: number needed to screen

Our guiding principles (Box 1) first recognize that quality measures should reflect meaningful health outcomes. Surrogate measures do not satisfy this principle. Clinical trial results are often not attained in the real world, and there should be evidence that quality measures do, in fact, substantially improve health outcomes across various locales and practice settings. Verification will often be beyond the capacity of a single organization. Careful analysis of data from large populations will be required to ascertain whether the expected benefits are being produced.

Similarly, extrapolation from observational studies should not be used to determine quality measures; this often confuses treatment outcomes with natural risk factor distributions. Binary measures based on dichotomizing continuous risk factor measurements (e.g., blood pressure, hemoglobin A1c) should not be used. Aggregate risk measures, such as the Global Outcomes Score [10], will usually be preferable to individual risk factors. Quality improvement efforts based on aggregate measures are more likely to yield true health improvements and reduce gaming and other distortions in provider activity; aggregate measures are also more robust than isolated individual risk factors. To maximize use, aggregate measures should be available for use without charge [46]. One could argue either that, while mathematically complex, these measures should be open source to ensure fairness and transparency or, contrarily, that to minimize gaming/reverse engineering, they should be black boxes with trusted curators and frequent updating.
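The idea behind an aggregate, outcome-based measure can be sketched in a few lines. This is explicitly not the Global Outcomes Score of reference [10], whose model is far richer; it is a minimal illustration that assumes each patient already has three modeled outcome probabilities (with no care, with current care, and with an agreed target level of care) from some external, validated risk model. All numbers are invented.

```python
# Toy aggregate, outcome-based measure. This is NOT the Global Outcomes Score
# of reference [10]; it only illustrates crediting modeled risk reduction
# across all risk factors instead of counting patients under cutoffs.
# ASSUMPTION: per-patient risks come from some external, validated risk model
# (not specified here); the numbers in the example are invented.
from dataclasses import dataclass


@dataclass
class PatientRisk:
    untreated: float  # modeled probability of the outcome with no care
    current: float    # modeled probability under the care actually delivered
    target: float     # modeled probability under an agreed target level of care


def aggregate_score(patients):
    """Share of the achievable risk reduction that current care actually achieves.

    Summing over patients means a large reduction in a high-risk patient counts
    for more than a small one in a low-risk patient; a negative contribution
    (current risk above untreated risk) lowers the score, so harm is visible.
    """
    achieved = sum(p.untreated - p.current for p in patients)
    achievable = sum(p.untreated - p.target for p in patients)
    if achievable <= 0:
        raise ValueError("no achievable risk reduction in this population")
    return achieved / achievable


panel = [
    PatientRisk(untreated=0.30, current=0.20, target=0.15),  # high-risk patient
    PatientRisk(untreated=0.05, current=0.04, target=0.03),  # low-risk patient
]
print(f"aggregate score: {aggregate_score(panel):.2f}")
```

Because there is no cutoff, there is no line to nudge patients across; the open question the text raises remains whether the underlying risk model should be published openly or curated as a black box.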

After years of application of “quality” measures tied to incentive payments, many clinicians have adopted practices to optimize revenue. Some of these practices are now out of step with current evidence. It is known that frontline clinicians are slow to integrate changes informed by recent evidence [47]; this problem is compounded when they are paid to do things “the old way.” It is therefore essential that quality measures evolve in a manner that demonstrates a timely response to new evidence; in this way, up-to-date quality measures can be a productive driver of early adoption of evidence-based interventions. The difficulty of changing established practices also highlights that high-stakes quality measures must be chosen carefully, focusing on important health outcomes and based on the most robust evidence.

Quality measures should reflect that a provider has elicited, explored, and honored patient values and preferences, and not merely indicate whether a test or intervention has been performed. To do otherwise strikes at the heart of patient-centered care. Because most health care interventions carry risk of causing harms, measures should reflect overutilization [16,48] as well as underutilization of care.
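A measure that captures overuse as well as underuse, and that honors documented patient preferences, can be sketched as a two-sided report. The example below is loosely in the spirit of the overtreatment measure proposed by Pogach and Aron [16], but the age cutoff, both HbA1c thresholds, and the field names are hypothetical placeholders, not a published specification.

```python
# Hypothetical two-sided diabetes measure that reports possible overuse as well
# as underuse. ASSUMPTIONS: the age cutoff, both HbA1c thresholds, and the field
# names are illustrative placeholders, not a published measure specification.
from dataclasses import dataclass

MIN_AGE = 65
UPPER_HBA1C = 9.0  # above this: flag possible undertreatment
LOWER_HBA1C = 6.5  # below this on a hypoglycemia-prone drug: flag possible overtreatment


@dataclass
class Patient:
    age: int
    hba1c: float
    on_hypoglycemia_prone_drug: bool  # e.g., insulin or a sulfonylurea
    informed_choice_documented: bool  # patient chose less intensive care after discussion


def two_sided_report(patients):
    cohort = [p for p in patients if p.age >= MIN_AGE]
    n = len(cohort)
    if n == 0:
        return {"n": 0, "possible_underuse": 0.0, "possible_overuse": 0.0}
    # A documented, informed preference for less intensive treatment is honored,
    # not counted against the clinician.
    under = sum(p.hba1c > UPPER_HBA1C and not p.informed_choice_documented
                for p in cohort)
    over = sum(p.hba1c < LOWER_HBA1C and p.on_hypoglycemia_prone_drug
               for p in cohort)
    return {"n": n, "possible_underuse": under / n, "possible_overuse": over / n}


patients = [
    Patient(72, 9.6, False, True),   # high HbA1c, but an informed choice is documented
    Patient(70, 9.4, False, False),  # possible underuse
    Patient(81, 6.1, True, False),   # possible overuse: low HbA1c on a hypoglycemia-prone drug
    Patient(68, 7.4, True, False),   # neither flag
    Patient(55, 6.0, True, False),   # outside the age range of this measure
]
print(two_sided_report(patients))
```

Reporting both rates side by side means that driving every patient toward a lower number no longer looks like unambiguous success.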

There should be an estimate of the expected magnitude of improved health from each quality measure. Clinical interventions to decrease smoking have a far greater impact on health than glycemic control for type 2 diabetes [49–51], yet current approaches obscure these relative contributions. Similarly, dental care [52] and effective treatment of alcohol [53] and opiate [54] addiction—highly meaningful to patients and strongly contributory to health (in terms of disability-adjusted life years)—are currently not quality measures. There should also be recognition of the potential for unintended downstream consequences, such as causing harm through interventions that may lead to overdiagnosis/overtreatment and through the associated opportunity costs [55].
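Expressing the expected benefit and harm of a measure in common metrics, as Box 1 recommends, can be as simple as converting event rates into a number needed to treat (NNT = 1 / absolute risk reduction) and a number needed to harm (NNH = 1 / absolute risk increase). The sketch below uses invented event rates for two hypothetical measures, X and Y; real values would come from trials or well-designed studies over a stated time horizon.

```python
# Expressing the expected benefit and harm of a measure in common metrics.
# NNT = 1 / absolute risk reduction; NNH = 1 / absolute risk increase.
# ASSUMPTION: the event rates below are invented placeholders; real values would
# come from trials or well-designed studies over a stated time horizon.
import math


def number_needed(rate_without, rate_with, *, harm=False):
    """NNT (default) or NNH from event rates without and with the intervention."""
    difference = (rate_with - rate_without) if harm else (rate_without - rate_with)
    return math.inf if difference <= 0 else 1.0 / difference


# Hypothetical measure X: outcome rate falls from 20% to 15% over 10 years.
nnt_x = number_needed(0.20, 0.15)
# Hypothetical measure Y: outcome rate falls from 10% to 9%, but a treatment harm
# (e.g., serious hypoglycemia) rises from 1% to 3% over the same period.
nnt_y = number_needed(0.10, 0.09)
nnh_y = number_needed(0.01, 0.03, harm=True)

print(f"X: NNT = {nnt_x:.0f}")
print(f"Y: NNT = {nnt_y:.0f}, NNH = {nnh_y:.0f}")
```

With these assumed rates, measure Y prevents one outcome for about every 100 patients treated while causing one harm for about every 50, a trade-off that a single “percent at target” figure would hide.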

We call for recognition that there are costs (time and resources) associated with acquiring and reporting data. The expected benefits of a measure must be weighed against direct and opportunity costs of data collection. Just as the Paperwork Reduction Act in the US requires an accounting of the time required for form completion, quality measures should include an estimate of the costs they will require. These costs may be mitigated to the extent that electronic health records can capture necessary data as part of the care process rather than data entry being required primarily for measurement.
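A back-of-the-envelope burden estimate of the kind the text calls for might look like the sketch below. All inputs (number of patients, minutes per patient, hourly staff cost, and expected events prevented per 1,000 patients) are hypothetical, and opportunity costs are deliberately left out; the second case simply assumes that capturing data during routine care in the electronic health record reduces the time required.

```python
# Back-of-the-envelope accounting of a measure's data-collection burden,
# analogous to the burden estimates required under the Paperwork Reduction Act.
# ASSUMPTIONS: all inputs are hypothetical; "events prevented per 1,000" would
# have to come from the evidence base, and opportunity costs are not included.
from dataclasses import dataclass


@dataclass
class MeasureBurden:
    patients: int                     # patients to whom the measure applies per year
    minutes_per_patient: float        # staff time to capture and report the data
    hourly_cost: float                # loaded cost of that time, in dollars
    events_prevented_per_1000: float  # expected benefit per 1,000 patients per year

    def annual_cost(self):
        return self.patients * self.minutes_per_patient / 60.0 * self.hourly_cost

    def events_prevented(self):
        return self.patients * self.events_prevented_per_1000 / 1000.0

    def cost_per_event_prevented(self):
        prevented = self.events_prevented()
        return float("inf") if prevented <= 0 else self.annual_cost() / prevented


manual_entry = MeasureBurden(2000, 3.0, 120.0, 5.0)
captured_in_ehr = MeasureBurden(2000, 0.5, 120.0, 5.0)  # data captured during care
for label, m in [("manual entry", manual_entry), ("captured in EHR", captured_in_ehr)]:
    print(f"{label}: ${m.annual_cost():,.0f}/year, "
          f"${m.cost_per_event_prevented():,.0f} per event prevented")
```

A measure whose expected benefit is near zero yields an infinite cost per event prevented, which is one way to make the “costs versus benefits” principle explicit before a measure is adopted.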

Finally, there should be acknowledgement that improved health is often the result of actions by multiple parties at multiple levels, not individual providers. In many cases, patient action (or inaction) is critical and individual providers have limited influence [56]. Cancer screening or immunization typically is attributable to the influence of a larger system, not an individual clinician. It also means that data must be aggregated to a level where the numbers have statistical meaning, including understanding of signal-to-noise ratios. Also, social determinants of health are often more influential than individual clinicians. Communities or health care systems (e.g., ACOs) could be measured on their abilities to influence social determinants of health, such as food security, housing, etc. Altering these critical social determinants is more desirable than paternalistic or coercive influence through providers.
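The statistical point about aggregation can be illustrated with the signal-to-noise (reliability) formulation often used in provider profiling: reliability is the share of variation in an observed rate that reflects true differences between providers rather than chance. The sketch assumes an average rate of 80%, a true between-provider standard deviation of 5 percentage points, and a reliability target of 0.7; all three are illustrative assumptions, and in practice the between-provider variance would have to be estimated from data (for example, with a hierarchical model).

```python
# Signal-to-noise (reliability) of a provider-level rate for a binary measure.
#   reliability = var_between / (var_between + p * (1 - p) / n)
# var_between: variance of true rates across providers; p * (1 - p) / n:
# sampling noise for one provider with n eligible patients.
# ASSUMPTIONS: p, between_sd, and the 0.7 target are illustrative values only.
import math


def reliability(n, p, between_sd):
    var_between = between_sd ** 2
    var_noise = p * (1 - p) / n
    return var_between / (var_between + var_noise)


def patients_needed(target, p, between_sd):
    """Smallest n whose reliability reaches `target` (solves the formula for n)."""
    n = target / (1 - target) * p * (1 - p) / between_sd ** 2
    return math.ceil(n)


P, BETWEEN_SD = 0.80, 0.05  # average rate 80%; true rates differ by ~5 points
for n in (20, 100, 500, 2000):
    print(f"n={n:>4}: reliability={reliability(n, P, BETWEEN_SD):.2f}")
print("patients needed for reliability 0.7:", patients_needed(0.7, P, BETWEEN_SD))
```

Under these assumptions, a clinician with 20 eligible patients has a reliability well below 0.5, so most of the apparent difference between clinicians would be noise; reaching 0.7 takes roughly 150 patients, which supports measuring at the practice or system level.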

Making Progress toward True Quality Measures

No simple strategy exists to move forward quickly, and we must guard against implementing flawed measures and then, years later, acknowledging their flaws. The best strategy for durability is likely one that uses the face validity of health—not surrogate outcomes—as the primary goal.

What do we know well enough to act upon? Our answers all come with caveats. Global risk measures are likely to be superior to individual risk factor measurements, but it is difficult to extrapolate from population findings to the individual. Patient satisfaction is an important, albeit incomplete, measure of quality. Many measures should be more preference-driven than we might like to admit, particularly for non-urgent decisions. In addition, asking health care providers to take responsibility for chronic conditions (e.g., hypertension, diabetes, obesity) over-emphasizes the role of the clinician. This is especially relevant when public health interventions that target social and environmental factors are much more likely to influence meaningful outcomes. We propose a set of performance measures that have higher face validity and better relationship to health (some examples are suggested in Box 2).

Box 2. Examples of Patient-Centered Performance Measures

Medication reconciliation in home after discharge

Home visits for indicated patients and coordinated care to meet their needs

Screening for and addressing fall risk

Patient self-assessment of health status (change over time)

Reduction of food insecurity

Ability to chew comfortably and effectively with dentition

Vision assessment and correction in place (e.g., patient has satisfactory glasses)

Hearing assessment and correction in place (e.g., patient has satisfactory hearing aids)

Reduction in tobacco use

Reliable access to home heating and cooling

Reliable transportation to appointments

Provision of effective contraception

Effective addiction care

Effective chronic pain care

Many groups have produced, and will continue to produce, metrics they label as “quality measures.” We propose, however, that quality measures used for financial rewards or public reporting be selected by an impartial curator that is financially and organizationally independent of potentially biasing groups. The US Preventive Services Task Force (USPSTF) provides a model for such an entity.

The Association of American Medical Colleges (AAMC) recently published a set of guiding principles for public reporting of provider performance [57], but they address neither the importance of patient-centeredness nor the potential for gaming. The Choosing Wisely campaign recognizes that quality is diminished when there is overtreatment, but selection of measures here, too, is problematic. Many quality measures will need to be based not on receipt of a test or a drug, or on pushing a risk factor below a target threshold, but on facilitating an informed decision. Recent IOM recommendations for the development of guidelines provide a framework for developing quality measures [58].

We must not continue to mismeasure quality by prioritizing time- and cost-effectiveness over principles of patient-centeredness, evidence-based interventions, and transparency. Substantial resources are invested in public quality efforts that suggest progress, but implementing inappropriate measures is counterproductive, undermines the professionalism of dedicated clinicians, and erodes patient trust. The above principles are offered to help identify what is important for health, i.e., care that matters, so we may then develop quality measures more likely to reflect and enhance the quality of care provided, while minimizing opportunities for distortions such as gaming and avoiding the opportunity costs associated with efforts to optimize surrogate endpoints.

Supporting Information

References

1. Donabedian A. Evaluating the quality of medical care. Milbank Mem Fund Q. 1966;44(Suppl):166–206.

2. Woolf SH, Aron LY. The US health disadvantage relative to other high-income countries: findings from a National Research Council/Institute of Medicine report. JAMA. 2013;309:771–772. doi: 10.1001/jama.2013.91. PMID: 23307023

3. Lindenauer PK, Lagu T, Ross JS, Pekow PS, Shatz A, Hannon N, et al. Attitudes of hospital leaders toward publicly reported measures of health care quality. JAMA Intern Med. 2014;174:1904–11.

4. Association of American Medical Colleges. Guiding Principles for Public Reporting of Provider Performance. 2014 [cited 2 Apr 2015]. http://perma.cc/27BL-G6TY

5. Burwell SM. Setting Value-Based Payment Goals—HHS Efforts to Improve U.S. Health Care. N Engl J Med. 2015;372:897–9. doi: 10.1056/NEJMp1500445. PMID: 25622024

6. Better, Smarter, Healthier: In historic announcement, HHS sets clear goals and timeline for shifting Medicare reimbursements from volume to value. U.S. Department of Health and Human Services; 2015 [cited 2 Apr 2015]. http://www.hhs.gov/news/press/2015pres/01/20150126a.html

7. Medicare Payment Advisory Commission. CMS List of Measures under Consideration for December 1, 2014. 2015. http://perma.cc/FY9C-RJPU

8. Glasziou PP, Buchan H, Del Mar C, Doust J, Harris M, Knight R, et al. When financial incentives do more good than harm: a checklist. BMJ. 2012;345:e5047. doi: 10.1136/bmj.e5047. PMID: 22893568

9. Micheel C, Ball J, Institute of Medicine (U.S.). Committee on Qualification of Biomarkers and Surrogate Endpoints in Chronic Disease. Evaluation of biomarkers and surrogate endpoints in chronic disease. Washington, D.C.: National Academies Press; 2010.

10. Eddy DM, Adler J, Morris M. The “global outcomes score”: a quality measure, based on health outcomes, that compares current care to a target level of care. Health Aff (Millwood). 2012;31:2441–50.

11. Baker DW, Qaseem A. Evidence-based performance measures: Preventing unintended consequences of quality measurement. Ann Intern Med. 2011;155:638–640.

12. Martin SA, Boucher M, Wright JM, Saini V. Mild hypertension in people at low risk. BMJ. 2014;349:g5432. doi: 10.1136/bmj.g5432. PMID: 25224509

13. Krumholz HM, Lin Z, Keenan PS, Chen J, Ross JS, Drye EE, et al. Relationship between hospital readmission and mortality rates for patients hospitalized with acute myocardial infarction, heart failure, or pneumonia. JAMA. 2013;309:587–93. doi: 10.1001/jama.2013.333. PMID: 23403683

14. Saver BG, Wang C, Dobie SA, Green PK, Baldwin L. The Central Role of Comorbidity in Predicting Ambulatory Care Sensitive Hospitalisations. Eur J Public Health. 2014;24(1):66–72. doi: 10.1093/eurpub/ckt019. PMID: 23543676

15. Miller AB, Wall C, Baines CJ, Sun P, To T, Narod SA. Twenty five year follow-up for breast cancer incidence and mortality of the Canadian National Breast Screening Study: randomised screening trial. BMJ. 2014;348:g366. doi: 10.1136/bmj.g366. PMID: 24519768

16. Pogach L, Aron D. The Other Side of Quality Improvement in Diabetes for Seniors: A Proposal for an Overtreatment Glycemic Measure. Arch Intern Med. 2012;172:1–3.

17. Greenhalgh T, Howick J, Maskrey N. Evidence based medicine: a movement in crisis? BMJ. 2014;348:g3725. doi: 10.1136/bmj.g3725. PMID: 24927763

18. Goitein L. Virtual quality: the failure of public reporting and pay-for-performance programs. JAMA Intern Med. 2014;174:1912–3. doi: 10.1001/jamainternmed.2014.3403. PMID: 25285846

19. Romeo GR, Abrahamson MJ. The 2015 Standards for Diabetes Care: Maintaining a Patient-Centered Approach. Ann Intern Med. 2015;162(11):785–6. doi: 10.7326/M15-0385. PMID: 25798733

20. Kruse GR, Khan SM, Zaslavsky AM, Ayanian JZ, Sequist TD. Overuse of Colonoscopy for Colorectal Cancer Screening and Surveillance. J Gen Intern Med. 2014;30:277–283. doi: 10.1007/s11606-014-3015-6. PMID: 25266407

21. Sleath JD. In pursuit of normoglycaemia: the overtreatment of type 2 diabetes in general practice. Br J Gen Pract. 2015;65:334–335. doi: 10.3399/bjgp15X685525. PMID: 26120106

22. Shaughnessy AF, Erlich DR, Slawson DC. Type 2 Diabetes: Updated Evidence Requires Updated Decision Making. Am Fam Physician. 2015;92:22. PMID: 26132122

23. Chernew ME, Mechanic RE, Landon BE, Safran DG. Private-payer innovation in Massachusetts: the “alternative quality contract”. Health Aff (Millwood). 2011;30:51–61.

24. National Committee for Quality Assurance. Desirable Attributes of HEDIS. http://perma.cc/S5VD-QLLX

25. RTI International. Accountable Care Organization 2012 Program Analysis: Quality Performance Standards Narrative Measure Specifications. Final Report. Baltimore, MD: Centers for Medicare & Medicaid Services; 2012. http://perma.cc/PG72-CQVL

26. Oxman AD. Improving the health of patients and populations requires humility, uncertainty, and collaboration. JAMA. 2012;308:1691–2. doi: 10.1001/jama.2012.14477. PMID: 23093168

27. Qaseem A, Hopkins RH Jr., Sweet DE, Starkey M, Shekelle P; Clinical Guidelines Committee of the American College of Physicians. Screening, Monitoring, and Treatment of Stage 1 to 3 Chronic Kidney Disease: A Clinical Practice Guideline From the American College of Physicians. Ann Intern Med. 2013;159:835+. PMID: 24145991

28. Screening for colorectal cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2008;149:627–37. PMID: 18838716

29. Screening for breast cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2009;151:716–26, W-236. doi: 10.7326/0003-4819-151-10-200911170-00008. PMID: 19920272

30. Mancia G, Fagard R, Narkiewicz K, Redon J, Zanchetti A, Böhm M, et al. 2013 ESH/ESC guidelines for the management of arterial hypertension: The Task Force for the management of arterial hypertension of the European Society of Hypertension (ESH) and of the European Society of Cardiology (ESC). Eur Heart J. 2013;34:2159–2219. doi: 10.1093/eurheartj/eht151. PMID: 23771844

31. American Diabetes Association. Standards of Medical Care in Diabetes. Diabetes Care. 2015;38(Suppl 1):S1–S94.

32. Stone NJ, Robinson JG, Lichtenstein AH, Goff DC, Lloyd-Jones DM, Smith SC, et al. Treatment of blood cholesterol to reduce atherosclerotic cardiovascular disease risk in adults: synopsis of the 2013 American College of Cardiology/American Heart Association cholesterol guideline. Ann Intern Med. 2014;160:339–43. doi: 10.7326/M14-0126. PMID: 24474185

33. Kmietowicz Z. Evidence to back dementia screening is still lacking, committee says. BMJ. 2015;350:h295. doi: 10.1136/bmj.h295. PMID: 25596537

34. Woolhandler S, Ariely D, Himmelstein DU. Why pay for performance may be incompatible with quality improvement. BMJ. 2012;345:e5015. doi: 10.1136/bmj.e5015. PMID: 22893567

35. Eddy DM, Adler J, Patterson B, Lucas D, Smith KA, Morris M. Individualized guidelines: the potential for increasing quality and reducing costs. Ann Intern Med. 2011;154:627–34. doi: 10.7326/0003-4819-154-9-201105030-00008. PMID: 21536939

36. Guthrie B, Payne K, Alderson P, McMurdo MET, Mercer SW. Adapting clinical guidelines to take account of multimorbidity. BMJ. 2012;345:e6341. doi: 10.1136/bmj.e6341. PMID: 23036829

37. McGlynn EA, Schneider EC, Kerr EA. Reimagining quality measurement. N Engl J Med. 2014;371:2150–3. doi: 10.1056/NEJMp1407883. PMID: 25470693

38. McShane M, Mitchell E. Person centred coordinated care: where does the QOF point us? BMJ. 2015;350:h2540. doi: 10.1136/bmj.h2540. PMID: 26067130

39. Loxterkamp D. Humanism in the time of metrics—an essay by David Loxterkamp. BMJ. 2013;347:f5539. doi: 10.1136/bmj.f5539. PMID: 24052583

40. Reichard J. Medicare Doc Payment Bill May Be Vehicle to Tighten Quality Measurement. In: Commonwealth Fund, Washington Health Policy Week in Review. 2013. http://perma.cc/44MD-RFDB

41. Robinson E, Blumenthal D, McGinnis JM. Vital signs: core metrics for health and health care progress. Washington, DC: National Academies Press; 2015.

42. Blumenthal D, McGinnis JM. Measuring Vital Signs: an IOM report on core metrics for health and health care progress. JAMA. 2015;313:1901–2. doi: 10.1001/jama.2015.4862. PMID: 25919301

43. Madara JL, Burkhart J. Professionalism, Self-regulation, and Motivation: How Did Health Care Get This So Wrong? JAMA. 2015;313(18):1793–4. doi: 10.1001/jama.2015.4045. PMID: 25965211

44. Chew-Graham CA, Hunter C, Langer S, Stenhoff A, Drinkwater J, Guthrie EA, et al. How QOF is shaping primary care review consultations: a longitudinal qualitative study. BMC Fam Pract. 2013;14:103. doi: 10.1186/1471-2296-14-103. PMID: 23870537

45. McNamara RL, Spatz ES, Kelley TA, Stowell CJ, Beltrame J, Heidenreich P, et al. Standardized Outcome Measurement for Patients With Coronary Artery Disease: Consensus From the International Consortium for Health Outcomes Measurement (ICHOM). J Am Heart Assoc. 2015;4(5):e001767. doi: 10.1161/JAHA.115.001767

46. Farne H. Publishers’ charges for scoring systems may change clinical practice. BMJ. 2015;351:h4325. doi: 10.1136/bmj.h4325. PMID: 26269463

47. Davidoff F. On the Undiffusion of Established Practices. JAMA Intern Med. 2015;175(5):809–11. doi: 10.1001/jamainternmed.2015.0167. PMID: 25774743

48. Mathias JS, Baker DW. Developing quality measures to address overuse. JAMA. 2013;309:1897–8. doi: 10.1001/jama.2013.3588. PMID: 23652520

49. Fiore MC, Baker TB. Should clinicians encourage smoking cessation for every patient who smokes? JAMA. 2013;309:1032–3. doi: 10.1001/jama.2013.1793. PMID: 23483179

50. Mahvan T, Namdar R, Voorhees K, Smith PC, Ackerman W. Clinical Inquiry: which smoking cessation interventions work best? J Fam Pract. 2011;60:430–1. PMID: 21731922

51. Erlich DR, Slawson DC, Shaughnessy AF. “Lending a hand” to patients with type 2 diabetes: a simple way to communicate treatment goals. Am Fam Physician. 2014;89:256, 258. PMID: 24695444

52. Khazan O. Why Don’t We Treat Teeth Like the Rest of Our Bodies? In: The Atlantic. 2014 [cited 22 May 2015]. http://perma.cc/4KCG-5LDZ

53. Friedmann PD. Clinical practice. Alcohol use in adults. N Engl J Med. 2013;368:365–73. doi: 10.1056/NEJMcp1204714. PMID: 23343065

54. Volkow ND, Frieden TR, Hyde PS, Cha SS. Medication-assisted therapies—tackling the opioid-overdose epidemic. N Engl J Med. 2014;370:2063–6. doi: 10.1056/NEJMp1402780. PMID: 24758595

55. Harris RP, Wilt TJ, Qaseem A. A value framework for cancer screening: advice for high-value care from the American College of Physicians. Ann Intern Med. 2015;162:712–7. doi: 10.7326/M14-2327. PMID: 25984846

56. Hershberger PJ, Bricker DA. Who determines physician effectiveness? JAMA. 2014;312:2613–4. doi: 10.1001/jama.2014.13304. PMID: 25317546

57. Association of American Medical Colleges. Guiding Principles for Public Reporting of Provider Performance. 2014. https://www.aamc.org/download/370236/data/guidingprinciplesforpublicreporting.pdf

58. Institute of Medicine (U.S.). Committee on Standards for Developing Trustworthy Clinical Practice Guidelines; Graham R, Mancher M, Wolman DM. Clinical practice guidelines we can trust. Washington, D.C.: National Academies Press; 2011.
