
Core Outcome Set–STAndards for Reporting: The COS-STAR Statement

Paula Williamson and colleagues present reporting standards for studies that report core outcome sets for health research.

Published in the journal: Core Outcome Set–STAndards for Reporting: The COS-STAR Statement. PLoS Med 13(10): e1002148. doi:10.1371/journal.pmed.1002148

Category: Guidelines and Guidance

doi: https://doi.org/10.1371/journal.pmed.1002148

Introduction

There is growing recognition that insufficient attention has been paid to the outcomes measured in clinical trials, which need to be relevant to health service users and other people making choices about health care if the findings of research are to influence practice and future research. In addition, outcome reporting bias, whereby results are selected for inclusion in a trial report on the basis of those results, has been identified as a problem for the interpretation of published data.

The development and implementation of core outcome sets (COS) is drawing increasing attention across all areas of research in health [1] and has relevance for research practice on a global scale. A recent survey reveals that some trialists, systematic reviewers, and guideline developers (COS users) do now refer to COS studies as a starting point for outcome selection in their work [1]. The use of COS can help improve the consistency in outcome measurement and reduce outcome reporting bias, which has led to much unnecessary waste in the production and reporting of research [2].

A recently updated systematic review identified over 200 published COS studies [1], and many more are known to be under development [3]. The first step in COS development is typically deciding “what to measure,” whereas “how” and “when” to measure usually come later. The value of a COS depends largely on why and how it was developed. The credibility of the group that developed the COS, in terms of its experience of outcome assessment and how it engaged with key stakeholders during the development process, may influence subsequent uptake of the COS. The reporting quality of COS development studies is also relevant to implementation. Recent work shows that reporting quality is currently variable [4], which restricts the ability of potential COS users, such as clinical trialists, systematic reviewers, and guideline developers, to assess a COS's relevance to their own work.

A COS has previously been defined as an agreed standardised set of outcomes that should be measured and reported, as a minimum, in all clinical trials in specific areas of health or health care [2]. However, COS are now also being developed for settings other than clinical trials. Although previous recommendations have been made regarding the reporting of a Delphi survey component of a COS study [5], it is timely to gain wider consensus given the increasing activity in this area, particularly because various other methods and components are incorporated in the COS development process [1]. In this article, we present the results of a research project, in which the objective was to develop a guideline (Core Outcome Set–STAndards for Reporting [COS-STAR]) for the reporting of studies developing COS using an approach proposed by the Enhancing the QUAlity and Transparency Of health Research (EQUATOR) Network [6]. The reporting checklist is relevant regardless of the consensus methodology used in the development of the COS and can be applied to COS developed for effectiveness trials, systematic reviews, or routine care [7].

Terminology

A COS describes what should be measured in a particular research or practice setting, with subsequent work needed to determine how each outcome should be defined or measured. A previous review found that only 38% of published studies contained recommendations about how to measure the outcomes in the COS [4]. This reporting guideline was developed to address this first stage of development, namely, what should be measured.

Ethical Approval

The University of Liverpool Ethics Committee was consulted and granted ethical approval for this study (Reference RETH000841). Informed consent was assumed if a participant responded to the Delphi survey or agreed to attend the consensus meeting.

Development of the COS-STAR Statement

A full protocol outlining the Delphi procedures for the COS-STAR study was published elsewhere [8], including the intention to produce an associated Explanation and Elaboration (E+E) document in which the meaning and rationale for each checklist item is given.

A preliminary list of 48 reporting items was developed from a previous systematic review of COS studies involving a Delphi survey [5] and from the personal experience of the project management group (the COS-STAR Group) in COS development and reporting. This preliminary list of potential reporting items was included in an international two-round Delphi survey to ascertain the importance of each item.

The Delphi survey involved four key stakeholder groups, chosen to encompass aspects of COS development, reporting, and uptake. Invitations by email were sent to the following: (i) lead authors of 196 published COS studies in the Core Outcome Measures in Effectiveness Trials (COMET) database, with a request to also forward the invitation to any methodologist involved; (ii) editors of 250 journals in which COS studies have been published and of 70 journals involved with CoRe Outcomes in WomeN’s health ([CROWN], http://www.crown-initiative.org/), an initiative endorsing the uptake of COS; (iii) potential users of COS who were (a) principal investigators of open phase III/IV trials, commercial or non-commercial, registered on clinicaltrials.gov (a 20% random sample of 8,449 registered trials) or (b) 76 Coordinating Editors from 53 Cochrane Review Groups; and (iv) 33 patient representatives from previous COMET workshops and the COMET Public and Patient Participation, Involvement and Engagement (PoPPIE) Working Group. It was not possible to invite the clinical guideline developers listed on the Guidelines International Network website (http://www.gin2015.net/about/) because it is a membership organisation, so a question about involvement in clinical guideline development was added to the survey instead. The aim was to recruit as many individuals from each stakeholder group as possible for the Delphi exercise. Participants were sent a personalised email outlining the project as described in the study protocol, together with a copy of the first systematic review of COS studies [4].

Delphi participants rated the importance of each reporting item on a scale from 1 (not important) to 9 (critically important) as defined in the protocol [8]. In round one of the Delphi study, participants could suggest new reporting items to be included in the second round. In round two, each participant who participated in round one was shown the number of respondents and distribution of scores for each item for all stakeholder groups separately, together with their own score from round one. An additional nine reporting items were suggested in round one of the Delphi exercise and were scored in round two. Consensus, defined a priori [8], was achieved if at least 70% of the voting participants from each stakeholder group scored between 7 and 9. COS developers (n = 25), COS users (n = 107), medical journal editors (n = 40), and patient representatives (n = 11) participated in both rounds, with 13 individuals also having been involved in clinical guideline development. The variable number of respondents per stakeholder group did not affect the results, because feedback in round two was presented by group. The Delphi process was conducted and managed using DelphiManager software developed by the COMET Initiative (http://www.comet-initiative.org/delphimanager/). The anonymised data from both rounds of the Delphi process, itemised by stakeholder group, are available in S1 Delphi Data.
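The a priori consensus rule described above can be expressed as a short check. The sketch below is a hypothetical illustration, not the DelphiManager implementation: the function names `group_agrees` and `reaches_consensus` and the example scores are invented for illustration; only the rule itself (at least 70% of each stakeholder group scoring an item 7 to 9 on the 1 to 9 scale) comes from the text.

```python
from fractions import Fraction

# Hypothetical sketch of the a priori COS-STAR Delphi consensus rule:
# an item reaches consensus only if, in EVERY stakeholder group,
# at least 70% of voting participants score it between 7 and 9.
THRESHOLD = Fraction(7, 10)  # exact 70%, avoids floating-point edge cases


def group_agrees(scores: list[int]) -> bool:
    """Return True if at least 70% of this group's scores fall in 7..9."""
    if not scores:
        return False  # assumption: a group with no votes cannot show agreement
    critical = sum(1 for s in scores if 7 <= s <= 9)
    return Fraction(critical, len(scores)) >= THRESHOLD


def reaches_consensus(scores_by_group: dict[str, list[int]]) -> bool:
    """Consensus requires agreement within each stakeholder group separately."""
    return all(group_agrees(scores) for scores in scores_by_group.values())


# Invented example scores for one reporting item (not study data).
item_scores = {
    "COS developers": [9, 8, 7, 7, 5, 9, 8, 7, 8, 6],   # 8/10 = 80%
    "COS users": [7, 8, 9, 9, 7, 4, 8, 7, 9, 7],        # 9/10 = 90%
    "journal editors": [7, 7, 8, 6, 5, 9, 8, 7, 3, 9],  # 7/10 = 70%
    "patient representatives": [9, 9, 6, 8, 7],        # 4/5 = 80%
}
print(reaches_consensus(item_scores))  # True
```

Because the rule is applied within each group, a single group falling below 70% blocks consensus for an item, however many participants the other groups contributed.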

The consensus meeting was a one-day event held in London, United Kingdom, in January 2016, with 17 international participants, including COS developers (n = 6), medical journal editors (n = 4), COS users (n = 5) [trialists (n = 1), Cochrane systematic review co-editors (n = 2), and clinical guideline developers (n = 2)], and patient representatives (n = 2). Individuals were selected to be invited to the meeting using the following broad principles: (i) Delphi participants who completed both rounds; (ii) a balance across the four stakeholder groups; (iii) a balance amongst COS developers of those using Delphi surveys and those using other methods; (iv) a balance across COS users of trialists, systematic reviewers, and guideline developers; and (v) a reasonable geographic spread. Initial invitations were determined by the authors based on their knowledge of individuals’ expertise. If an individual could not attend, they were replaced by someone else from the same stakeholder group wherever possible.

The roles of each participant were not mutually exclusive, and there was a mix of clinical and methodological experience. The objective of the meeting was to discuss and vote on the series of 57 reporting items thought to be potentially important for inclusion in the COS-STAR checklist. Three additional participants (one facilitator and two note takers) attended the meeting but did not participate in the discussion or voting.

The Delphi results for all 57 reporting items were presented to the consensus meeting participants before and during the meeting (S1 Consensus Matrix Delphi). A copy of the consensus meeting slides showing the response rates, geographical distribution, and Delphi round two results for each stakeholder group can be found in S1 Consensus Meeting Presentation. Although the response rate to the survey was low, over 85% (183/214) of round one participants completed round two, with no evidence of attrition bias between rounds (S1 Consensus Meeting Presentation).
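The round-two completion rate can be checked against the per-group counts reported for participants who completed both Delphi rounds. A short arithmetic sketch; the variable names are illustrative only:

```python
from fractions import Fraction

# Participants who completed both Delphi rounds, by stakeholder group
# (counts as reported in the Delphi section of this article).
completed_both_rounds = {
    "COS developers": 25,
    "COS users": 107,
    "medical journal editors": 40,
    "patient representatives": 11,
}
ROUND_ONE_PARTICIPANTS = 214  # round one participants, as reported

completed = sum(completed_both_rounds.values())
retention = Fraction(completed, ROUND_ONE_PARTICIPANTS)
print(completed)                  # 183
print(f"{float(retention):.1%}")  # 85.5%
```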

Members of the COS-STAR group (study authors) who could not attend the meeting were contacted in advance to discuss the results; their comments were documented and fed into the meeting discussion. Following presentation of the Delphi result for each potential reporting item, the item was discussed and then voted on by the meeting participants, and retained if more than 70% of the voting participants (at least 12 of the 17 voting participants) scored between 7 and 9. Voting was undertaken using OMBEA response (http://www.ombea.com/). During discussion of the second Consensus Process item, “Description of what information was presented to participants about the consensus process at its start,” one participant commented on the absence of an item related to ethical approval. The group agreed to vote on this, and consensus was achieved that this item should be included in the reporting guideline (S1 Consensus Meeting Critical Scores). This issue has become more relevant as the inclusion of patients as participants in COS development has increased.
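The figure “at least 12 of the 17 voting participants” follows from the meeting's “more than 70%” rule. The sketch below, with hypothetical helper names, computes the minimum number of 7 to 9 votes for any number of voters using integer arithmetic, and contrasts it with the “at least 70%” rule used in the Delphi rounds:

```python
# Minimum number of "critical" votes (scores 7 to 9) needed under each rule.
# Integer arithmetic avoids floating-point rounding at exact 70% boundaries.


def min_votes_more_than_70pct(n_voting: int) -> int:
    """Smallest k with k / n_voting > 7/10, i.e. 10k > 7n (consensus meeting rule)."""
    return (7 * n_voting) // 10 + 1


def min_votes_at_least_70pct(n_voting: int) -> int:
    """Smallest k with k / n_voting >= 7/10, i.e. 10k >= 7n (Delphi round rule)."""
    return (7 * n_voting + 9) // 10


print(min_votes_more_than_70pct(17))  # 12
print(min_votes_at_least_70pct(17))   # 12
print(min_votes_more_than_70pct(10))  # 8
print(min_votes_at_least_70pct(10))   # 7
```

The two rules differ only when 70% of the number voting is a whole number: with 10 voters, “more than 70%” needs 8 votes whereas “at least 70%” needs 7; with 17 voters both give 12.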

The management group also considered the format of the reporting checklist. It was confirmed that the required items would be relevant to the reporting of a COS regardless of whether it had been developed for effectiveness trials, systematic reviews, or routine care. Meeting participants with previous experience of developing reporting guidelines cautioned against making the first guideline too stringent, which would risk COS developers not using it. The need to merge some reporting items and create some sub-items was noted, together with suggestions for an explanatory document to enhance the usefulness of the final COS-STAR checklist. Specifically, the following amendments were made by the COS-STAR group after the meeting:

(1) Participants (methods): The four items under this topic were considered to overlap. The suggestion was made, and accepted by the group, that one item covering the sub-items of who, how, and why would be more meaningful.

(2) Consensus process: There was general agreement that although all five items under this topic could provide useful information, some were too specific to be included, acknowledging that there is no gold standard approach. The suggestion was made, and accepted by the group, that one item covering the description of the consensus process would more likely be followed.

(3) Outcome scoring: a suggestion was made to include both aspects in a single item.

(4) Participants (results): a suggestion was made to combine these issues into a single item.

(5) Outcome results: there was general agreement that these multiple items were too detailed and should be merged together, elaborating on the issues in the E+E document (S1 Explanation and Elaboration).

(6) Limitations: it was agreed that it was good practice to include an item related to limitations and include the examples provided in the E+E document.

Following the meeting, a draft of the COS-STAR checklist was circulated to the COS-STAR group and the remainder of the consensus meeting attendees. All comments and revisions were taken into consideration and the checklist revised accordingly. The process of obtaining feedback and refining the checklist was repeated until no further changes were needed.

The comprehension of the final checklist items was reviewed by two expert guideline developers (DGA, DM). Testing was undertaken by two COS developers who were writing up their COS studies. Two researchers currently developing COS also reviewed the statement. Testers were independent of the COS-STAR development process. Feedback from this exercise informed the final version of the COS-STAR checklist.

The COS-STAR Statement

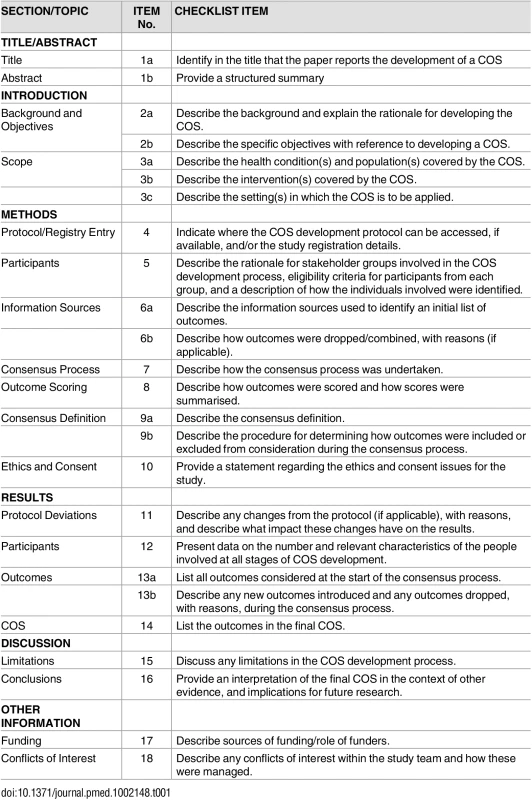

The 18-item COS-STAR checklist presented in Table 1 applies to COS development studies for which the aim is to decide which outcomes should be included in the COS and does not extend to cover the reporting of work to determine how those outcomes should be defined or measured. The checklist aims to cover the minimum reporting requirements related to the background, scope, methods, results, conclusion, and limitations of such studies. In the accompanying E+E document (S1 Explanation and Elaboration), explanations are provided for the meaning and rationale for each checklist item. The checklist is designed to be applicable regardless of the consensus methodology used to develop the COS (including mixed methods) and of the participant groups who may have been involved in selecting outcomes (including patient representatives), as identified in a previous systematic review [4]. The COS-STAR checklist provides guidance for minimal COS study reporting, but in certain circumstances additional reporting items may be warranted at the discretion of the study authors. For example, study authors may wish to describe the steps in deciding how to measure the core outcomes if this was considered [9].

Table 1. Core Outcome Set–STAndards for Reporting: The COS-STAR Statement.

Discussion

The COS-STAR checklist was developed using an approach that has been recommended for developing medical reporting guidelines [6]. The intention of the COS-STAR checklist is to promote the transparency and completeness of reporting of COS studies such that COS users can judge whether the recommended set is relevant to their work. For example, although the adequacy of the description of the scope of a COS is increasing [1], further improvement is needed in order for uptake to be maximised and, in turn, assessed.

The COS-STAR E+E document (S1 Explanation and Elaboration) was developed to provide an explanation of each of the COS-STAR checklist items and to provide a framework for good reporting practices, with examples, for those interested in conducting and reporting COS development work. It follows a similar format to that used in other explanatory documents [10,11]. The E+E document also describes the endorsement and implementation strategies planned for the COS-STAR Statement.

COS-STAR is not a quality assessment tool and should not be used as one, for example, to compare the validity of related COS. Similarly, the checklist does not make recommendations about which methodology should be used or which stakeholder groups to include to reach consensus in COS development projects; guidance on such issues can be found elsewhere [2,12]. As an example, several studies have looked at developing COS in childhood asthma, each using different methodology and proposing slight variations in the core outcomes [13–16]. The COS-STAR Statement would not distinguish which of these COS should be used, although it may be useful for critical appraisal of published COS.

As with similar reporting guidelines [17] that have undergone several revisions, COS-STAR is an evolving guideline and may well require modification in the future. The consensus meeting participants acknowledged that there is limited empirical evidence and methodological development relating to some of the reporting items that were considered for inclusion and chose to exclude those items until these issues are better understood. As an example, there is some evidence that the method of feedback does influence how people score outcomes [18], which perhaps suggests that this is important information to report. However, until there is better guidance on how COS developers should present feedback, the item was excluded from the current reporting checklist.

The guideline may also require modification as new stakeholder groups with relevant interests and experience emerge. For example, regulators have recently recommended the use of COS in trials of medicinal products in patients with asthma [19] and will be encouraged to provide feedback on the statement. The geographical spread of participants in the Delphi survey, and consequently the consensus meeting, is representative of COS study developers, who are predominantly North American and European [4]. This is recognised as a limitation of both COS study development and this reporting guideline, given that COS are equally relevant to low- and middle-income countries. Patient representatives, rather than patients, were invited to participate. Because this is a relatively complex area of methodology, individuals known to have had some level of involvement with COS development were selected, so that participants would already be familiar with the issues. As patient involvement and participation in COS development increase, their contribution to a future revision of this reporting guideline will be sought. An important objective of the COMET Initiative is to promote wider involvement.

Although the acceptance rate to the Delphi invitation may appear low, participation in round two was above 85% in each stakeholder group, with no evidence of attrition bias (S1 Consensus Meeting Presentation). Readers are invited to submit comments, criticisms, experiences, and recommendations via the COMET website (http://www.comet-initiative.org/contactus), which will be considered for future refinement of the COS-STAR Statement.

Supporting Information

References

1. Gorst SL, Gargon E, Clarke M, Blazeby JM, Altman DG, Williamson PR. Choosing Important Health Outcomes for Comparative Effectiveness Research: An Updated Review and User Survey. PLoS ONE. 2016;11(1):e0146444. doi: 10.1371/journal.pone.0146444. PMID: 26785121

2. Williamson PR, Altman DG, Blazeby JM, Clarke M, Devane D, Gargon E, et al. Developing core outcome sets for clinical trials: issues to consider. Trials. 2012;13:132. doi: 10.1186/1745-6215-13-132. PMID: 22867278

3. COMET Initiative. 2016. http://www.comet-initiative.org/. [Accessed 5 May 2016].

4. Gargon E, Gurung B, Medley N, Altman DG, Blazeby JM, Clarke M, et al. Choosing Important Health Outcomes for Comparative Effectiveness Research: A Systematic Review. PLoS ONE. 2014;9(6):e99111. doi: 10.1371/journal.pone.0099111. PMID: 24932522

5. Sinha I, Smyth RL, Williamson PR. Using the Delphi technique to determine which outcomes to measure in clinical trials: recommendations for the future based on a systematic review of existing studies. PLoS Med. 2011;8(1):e1000393. doi: 10.1371/journal.pmed.1000393. PMID: 21283604

6. Moher D, Schulz KF, Simera I, Altman DG. Guidance for developers of health research reporting guidelines. PLoS Med. 2010;7(2):e1000217. doi: 10.1371/journal.pmed.1000217. PMID: 20169112

7. Clarke M, Williamson PR. Core outcome sets and systematic reviews. Systematic Reviews. 2016;5:11. doi: 10.1186/s13643-016-0188-6. PMID: 26792080

8. Kirkham JJ, Gorst S, Altman DG, Blazeby J, Clarke M, Devane D, et al. COS-STAR: a reporting guideline for studies developing core outcome sets (protocol). Trials. 2015;16:373. doi: 10.1186/s13063-015-0913-9. PMID: 26297658

9. Schmitt J, Spuls PI, Thomas KS, Simpson E, Furue M, Deckert S, et al. The Harmonising Outcome Measures for Eczema (HOME) statement to assess clinical signs of atopic eczema in trials. J Allergy Clin Immunol. 2014;134:800–807. doi: 10.1016/j.jaci.2014.07.043. PMID: 25282560

10. Moher D, Hopewell S, Schulz KF, Montori V, Gøtzsche PC, Devereaux PJ, et al. CONSORT 2010 Explanation and Elaboration: updated guidelines for reporting parallel group randomised trials. BMJ. 2010;340:c869. doi: 10.1136/bmj.c869. PMID: 20332511

11. Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis J, et al. The PRISMA Statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: Explanation and Elaboration. PLoS Med. 2009;6(7):e1000100. doi: 10.1371/journal.pmed.1000100. PMID: 19621070

12. Schmitt J, Apfelbacher C, Spuls PI, Thomas KS, Simpson E, Furue M, et al. The Harmonizing Outcome Measures for Eczema (HOME) roadmap: A methodological framework to develop core sets of outcome measurements in dermatology. Journal of Investigative Dermatology. 2015;135(1):24–30. doi: 10.1038/jid.2014.320. PMID: 25186228

13. Smith MA, Leeder SR, Jalaludin B, Smith WT. The asthma health outcome indicators study. Australian and New Zealand Journal of Public Health. 1996;20(1):69–75. doi: 10.1111/j.1467-842X.1996.tb01340.x. PMID: 8799071

14. Sinha I, Gallagher R, Williamson PR, Smyth RL. Development of a core outcome set for clinical trials in childhood asthma: a survey of clinicians, parents, and young people. Trials. 2012;13:103. doi: 10.1186/1745-6215-13-103. PMID: 22747787

15. Reddel HK, Taylor DR, Bateman ED, Boulet LP, Boushey HA, Busse WW, et al. An official American Thoracic Society/European Respiratory Society statement: asthma control and exacerbations: standardizing endpoints for clinical asthma trials and clinical practice. Am J Respir Crit Care Med. 2009;180(1):59–99. doi: 10.1164/rccm.200801-060ST. PMID: 19535666

16. Busse WW, Morgan WJ, Taggart V, Togias A. Asthma outcomes workshop: overview. Journal of Allergy & Clinical Immunology. 2012;129(3 Suppl):S1–8. doi: 10.1016/j.jaci.2011.12.985

17. Schulz KF, Altman DG, Moher D, for the CONSORT Group. CONSORT 2010 Statement: updated guidelines for reporting parallel group randomised trials. PLoS Med. 2010;7(3):e1000251. doi: 10.1371/journal.pmed.1000251. PMID: 20352064

18. Brookes S, Macefield R, Williamson P, McNair A, Potter S, Blencowe N, et al. Three nested RCTs of dual or single stakeholder feedback within Delphi surveys during core outcome and information set development. Trials. 2015;16(Suppl 2):P51.

19. Committee for Medicinal Products for Human Use (CHMP). Guideline on the clinical investigation of medicinal products for the treatment of asthma. CHMP/EWP/2922/01 Rev.1. 2015. http://www.ema.europa.eu/docs/en_GB/document_library/Scientific_guideline/2015/12/WC500198877.pdf


The article was published in the journal PLOS Medicine.
